Chuanruo Ning

I’m a second-year CS PhD student at Cornell University working with Prof. Wei-Chiu Ma and Prof. Kuan Fang. I obtained my bachelor's degree from the Turing Class at Peking University. I’m interested in leveraging 3D information to improve robot manipulation. I was fortunate to have worked with Prof. Alan Yuille, Prof. Hao Dong, and Dr. Kaichun Mo.

Research

- Prompting with the Future: Open-World Model Predictive Control with Interactive Digital Twins

- RSS 2025

- Chuanruo Ning, Kuan Fang†, Wei-Chiu Ma†

- Project Page / Code

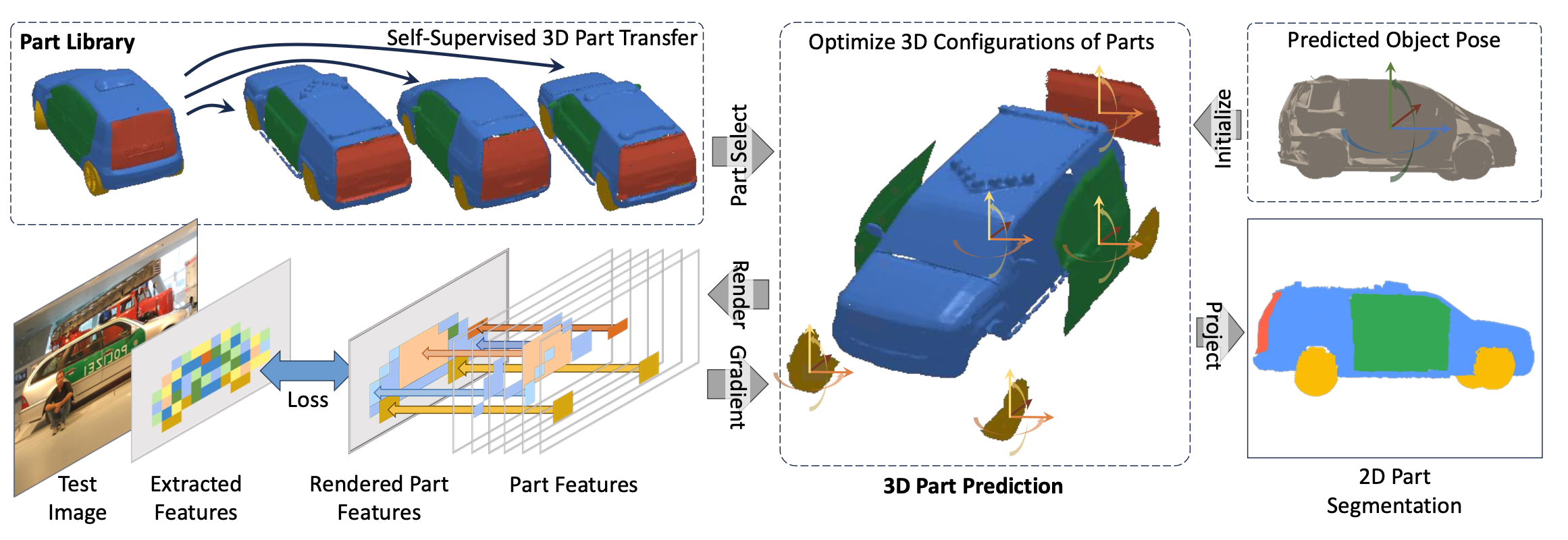

- Part321: Recognizing Object Parts in 3D from a 2D Image Using 1-Shot Annotations

- Under review

- Chuanruo Ning*, Jiawei Peng*, Yaoyao Liu, Jiahao Wang, Yining Sun, Alan Yuille, Adam Kortylewski, Angtian Wang

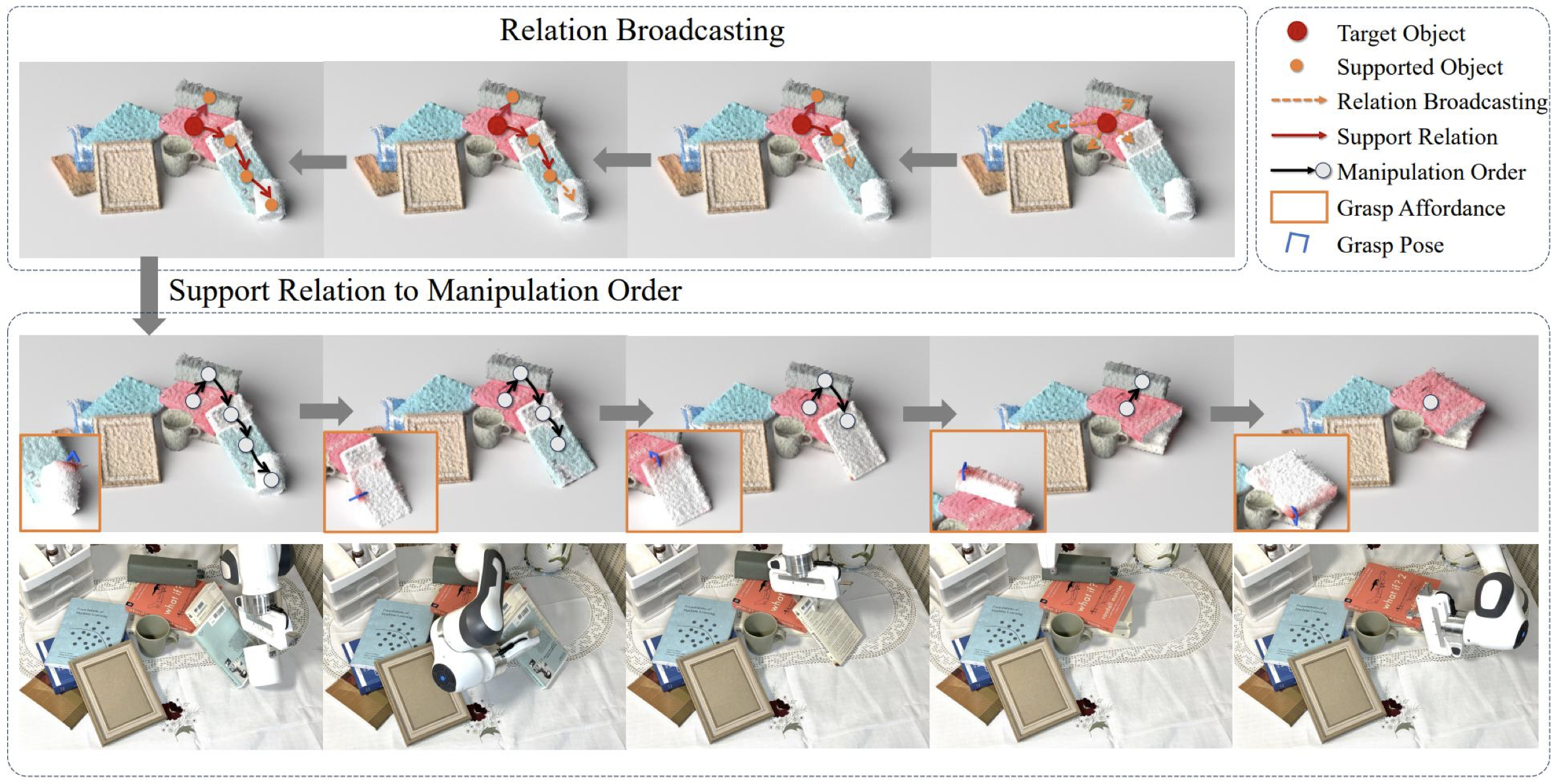

- Broadcasting Support Relations Recursively from Local Dynamics for Object Retrieval in Clutters

- RSS 2024

- Yitong Li*, Ruihai Wu*, Haoran Lu, Chuanruo Ning, Yan Shen, Guanqi Zhan, Hao Dong

- Paper / Project Page (coming soon)

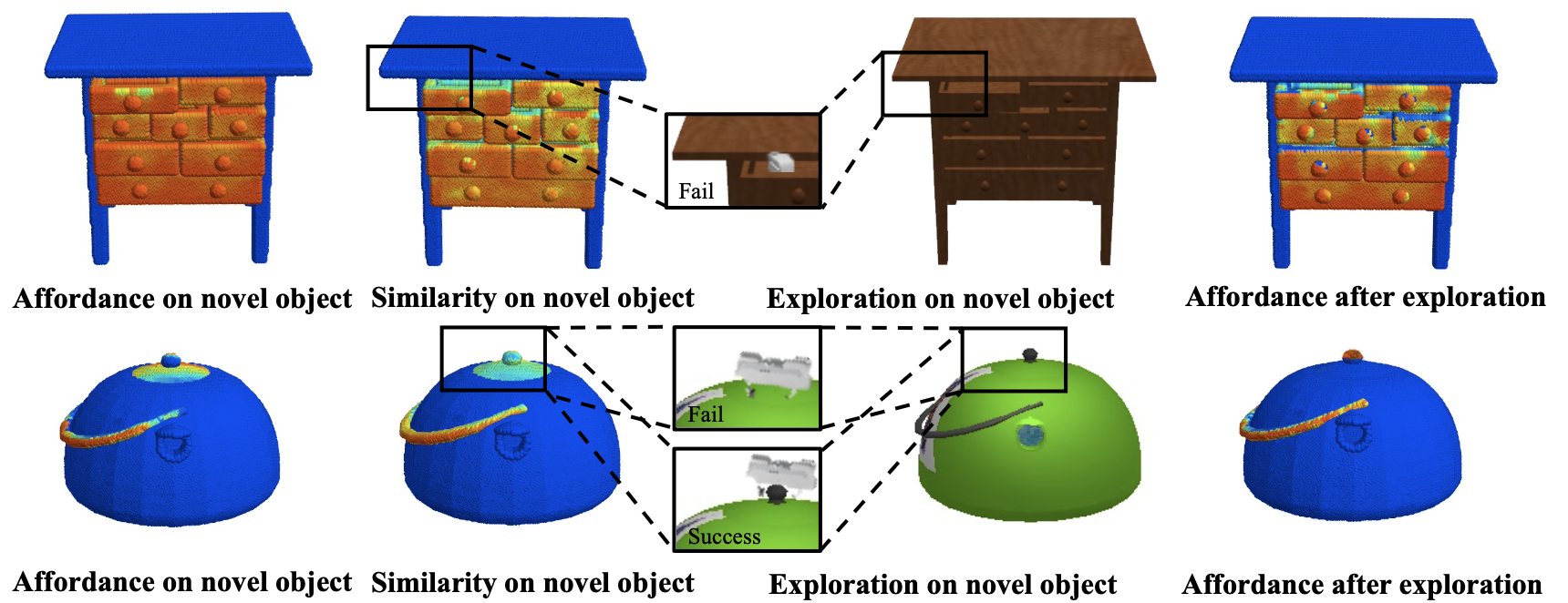

- Where2Explore: Few-shot Affordance Learning for Unseen Novel Categories of Articulated Objects

- NeurIPS 2023

- Chuanruo Ning, Ruihai Wu, Haoran Lu, Kaichun Mo, Hao Dong

- Paper / Project Page

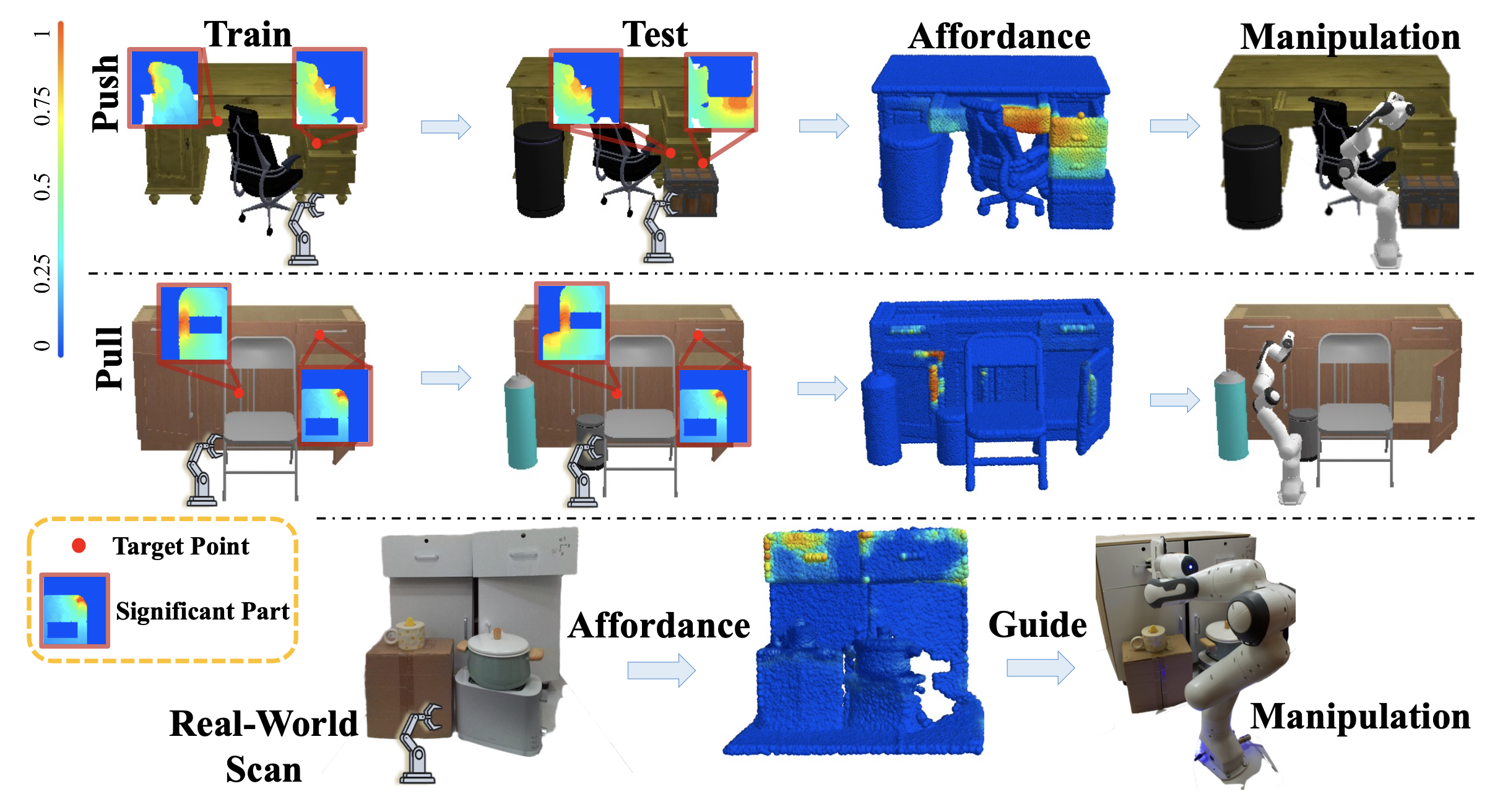

- Learning Environment-Aware Affordance for 3D Articulated Object Manipulation under Occlusion

- NeurIPS 2023

- Ruihai Wu*, Kai Cheng*, Yan Shen, Chuanruo Ning, Guanqi Zhan, Hao Dong

- Paper / Project Page

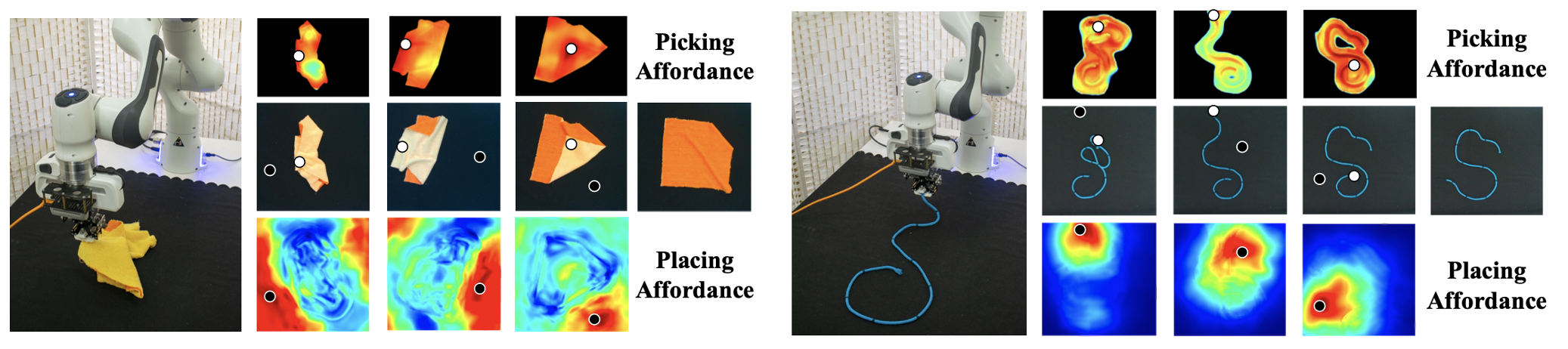

- Learning Foresightful Dense Visual Affordance for Deformable Object Manipulation

- ICCV 2023

- Ruihai Wu*, Chuanruo Ning*, Hao Dong

- Paper / Project Page / Video / Video (real-world)

Talks

Title: Part Detection via Render-and-Compare Method

Date: 2023-8-18

Location: Malone Hall, Johns Hopkins University, Baltimore, United States

Slides

Title: Occlusion Reasoning for Manipulation

Date: 2022-8-4

Location: Center on Frontiers of Computing Studies, Beijing, China

Slides

Title: In-Hand Reorientation

Date: 2022-2-20

Location: Center on Frontiers of Computing Studies, Beijing, China

Slides

Services

- Program Committee Member: Annual AAAI Conference on Artificial Intelligence (AAAI 2024)

- Reviewer: Conference on Computer Vision and Pattern Recognition (CVPR 2024)

Awards and Honors

- 2023: Huatai Securities Scholarship

- 2023: Peking University Merit Student

- 2022: John Hopcroft Scholarship

- 2022: Peking University Dean’s Scholarship

- 2020: Peking University Freshman Scholarship